I kind of want to do a crazy project: an AI-powered game for my cat.

I want my program to recognize when my cat takes some kind of action, and do something in response. Ideally, the game would learn to amuse my cat, and tell if she is amused by looking at her.

In that vein, I decided the first step would be to get a Python program to interact with my webcam. Using the Python OpenCV package (cv2), I was able to get a snapshot from my webcam.

Here is that code:

webcam_snapshot.py

#webcam_snapshot.py
import sys

import cv2

# Open the webcam (0 usually corresponds to the first webcam device)
cap = cv2.VideoCapture(0)

# Check if the webcam is opened correctly
if not cap.isOpened():
    print("Error: Could not open webcam.")
    sys.exit(1)

# Capture one frame
ret, frame = cap.read()

if ret:
    # Save the captured image
    cv2.imwrite('webcam_image.jpg', frame)
    print("Image saved as 'webcam_image.jpg'.")
else:
    print("Error: Failed to capture image.")

# Release the webcam and close any OpenCV windows
cap.release()
cv2.destroyAllWindows()

Running it generates a file named webcam_image.jpg.

Now let’s see if we can get it to detect some kind of motion.

Here is the code:

webcam_capture_snap_upon_motion.py

#webcam_capture_snap_upon_motion.py
import sys

import cv2

# Minimum per-pixel brightness change (0-255) that counts as motion.
# Higher values make the detector less sensitive.
sensitivity = 50

# Initialize the webcam
cap = cv2.VideoCapture(0)

# Check if the webcam is opened correctly
if not cap.isOpened():
    print("Error: Could not open webcam.")
    sys.exit(1)

# Read the first frame
ret, prev_frame = cap.read()
if not ret:
    print("Error: Failed to capture image.")
    cap.release()
    sys.exit(1)

# Convert the first frame to grayscale
prev_gray = cv2.cvtColor(prev_frame, cv2.COLOR_BGR2GRAY)

while True:
    # Capture a new frame
    ret, frame = cap.read()
    if not ret:
        print("Error: Failed to capture image.")
        break

    # Convert the current frame to grayscale
    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

    # Compute the absolute difference between the previous and current frame
    frame_diff = cv2.absdiff(prev_gray, gray)

    # Threshold the difference to get the regions with significant motion
    _, thresh = cv2.threshold(frame_diff, sensitivity, 255, cv2.THRESH_BINARY)

    # Find contours in the thresholded image
    contours, _ = cv2.findContours(thresh, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

    # If any contours are found, that means there is motion
    if len(contours) > 0:
        # Save the current frame as an image
        cv2.imwrite('motion_detected_image.jpg', frame)
        print("Motion detected! Image saved as 'motion_detected_image.jpg'.")

        # Optional: break the loop after capturing the snapshot
        break

    # Display the thresholded difference (for debugging purposes)
    cv2.imshow('Motion Detection', thresh)

    # Update the previous frame for the next loop
    prev_gray = gray

    # Check for 'q' to quit the program
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# Release the webcam and close any OpenCV windows
cap.release()
cv2.destroyAllWindows()

I pointed the webcam at the wall. With the sensitivity set to 25, it triggered right away, before anything had moved. With the sensitivity set to 50, it waited until I moved my hand in front of the camera. (Despite the name, a higher sensitivity value means a bigger change between frames is needed to trigger.)
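One plausible explanation for the early trigger at 25 (an assumption on my part, not something I measured) is ordinary sensor noise or auto-exposure settling, since the script fires on even a single changed pixel. Here is a rough NumPy-only sketch of the same difference-and-threshold step that also requires a minimum share of the frame to change. The function names and the `min_fraction` parameter are made up for illustration, and the frames are synthetic rather than real webcam data.

```python
import numpy as np


def changed_fraction(prev_gray, gray, sensitivity):
    """Fraction of pixels whose brightness changed by more than `sensitivity`."""
    # Cast to a signed type first: subtracting uint8 arrays would wrap around.
    diff = np.abs(prev_gray.astype(np.int16) - gray.astype(np.int16))
    return float(np.mean(diff > sensitivity))


def is_motion(prev_gray, gray, sensitivity=50, min_fraction=0.01):
    """Report motion only when enough of the frame changed (hypothetical rule)."""
    return changed_fraction(prev_gray, gray, sensitivity) >= min_fraction


# Synthetic 100x100 "wall": a flat gray frame, then the same frame with mild noise.
rng = np.random.default_rng(0)
wall = np.full((100, 100), 120, dtype=np.uint8)
noise = rng.integers(-30, 31, size=wall.shape)
noisy_wall = (wall.astype(np.int16) + noise).astype(np.uint8)

# A frame where a bright 20x20 "hand" has entered the view.
hand = wall.copy()
hand[40:60, 40:60] = 220

print("noise at 25:", changed_fraction(wall, noisy_wall, 25))
print("noise at 50:", changed_fraction(wall, noisy_wall, 50))
print("hand at 50:", changed_fraction(wall, hand, 50))
```

In the webcam loop, a check like `is_motion(prev_gray, gray)` could stand in for the `len(contours) > 0` test. The right `min_fraction` would depend on the camera and the lighting.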

Now let’s see if we can get it to recognize a human face.

We will use the pre-trained Haar Cascade Classifier for face detection from OpenCV’s data. I downloaded it from the link below and kept the file in the same directory as the Python script.

https://github.com/opencv/opencv/raw/master/data/haarcascades/haarcascade_frontalface_default.xml

Here is the script:

webcam_capture_snap_upon_human_face.py

# webcam_capture_snap_upon_human_face.py

import cv2

# Path to the downloaded Haar Cascade XML file

face_cascade_path = './haarcascade_frontalface_default.xml'

# Initialize the face cascade

face_cascade = cv2.CascadeClassifier(face_cascade_path)

# Initialize the webcam

cap = cv2.VideoCapture(0)

# Check if the webcam is opened correctly

if not cap.isOpened():

print("Error: Could not open webcam.")

exit()

while True:

# Capture a new frame

ret, frame = cap.read()

if not ret:

print("Error: Failed to capture image.")

break

# Convert the frame to grayscale (Haar Cascade works on grayscale images)

gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

# Detect faces in the grayscale frame

faces = face_cascade.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5, minSize=(30, 30))

# If faces are detected, take a snapshot

if len(faces) > 0:

# Draw rectangles around detected faces (for debugging/visualization purposes)

for (x, y, w, h) in faces:

cv2.rectangle(frame, (x, y), (x + w, y + h), (255, 0, 0), 2)

# Save the frame with a detected face as an image

cv2.imwrite('face_detected_image.jpg', frame)

print("Face detected! Image saved as 'face_detected_image.jpg'.")

# Optional: break the loop after capturing the snapshot

break

# Display the frame with face detection (for debugging/visualization purposes)

cv2.imshow('Face Detection', frame)

# Check for 'q' to quit the program

if cv2.waitKey(1) & 0xFF == ord('q'):

break

# Release the webcam and close any OpenCV windows

cap.release()

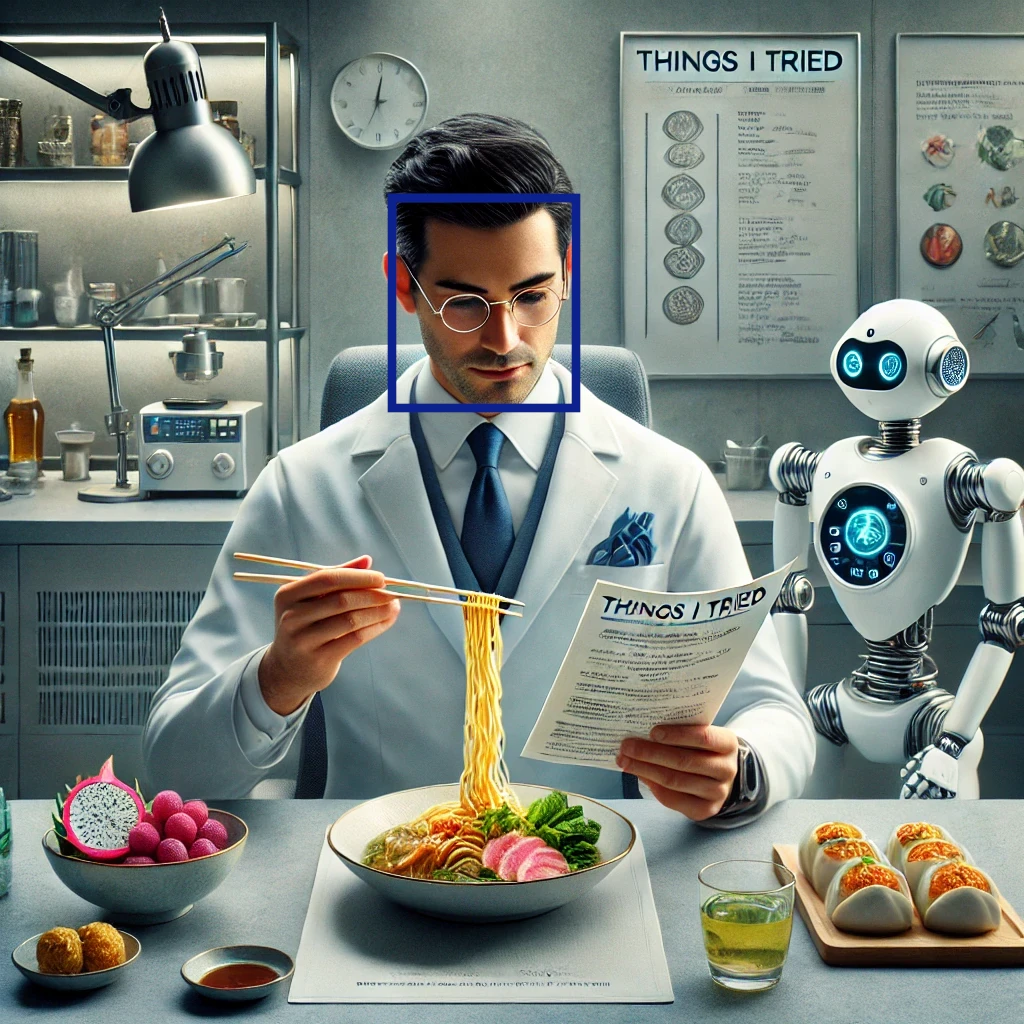

cv2.destroyAllWindows()I started the script with the webcam facing the wall. It waited. I picked up the webcam and slowly turned it toward me. When it could see my face, it snapped my beautiful mug. Cool.

However, upon further testing, I realized that my messy closet of hanging clothes could also trigger it. I guess there may be a hidden face in there somewhere. Let’s see if we can tweak the sensitivity.

By jacking up the minNeighbors and the minSize I was able to seriously reduce the false positives, but eventually I started missing the true positives. I settled on these values for now:

faces = face_cascade.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=10, minSize=(50, 50))

There are other strategies for reducing the false positives, including using more robust models.
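One simple strategy, which I have not tried in the scripts above, is to require a detection in several frames in a row before taking the snapshot, on the theory that a face-shaped pile of clothes flickers in and out while a real face stays put. The sketch below is plain Python with simulated per-frame results. The class name `ConsecutiveHits` and the `required` count are invented for illustration.

```python
class ConsecutiveHits:
    """Fire only after `required` consecutive frames contain a detection."""

    def __init__(self, required=5):
        self.required = required
        self.streak = 0

    def update(self, detected):
        # A miss resets the streak, so isolated false positives never accumulate.
        self.streak = self.streak + 1 if detected else 0
        return self.streak >= self.required


def first_trigger(results, required=3):
    """Index of the frame at which the snapshot would be taken, or None."""
    gate = ConsecutiveHits(required)
    for i, detected in enumerate(results):
        if gate.update(detected):
            return i
    return None


# Simulated per-frame results: True means the detector returned at least one box.
flicker = [True, False, True, False, False, True]  # sporadic false positives
steady = [False, True, True, True, True, True]     # a face held in view

print("flicker:", first_trigger(flicker))
print("steady:", first_trigger(steady))
```

In the face detection loop, this would mean calling `gate.update(len(faces) > 0)` once per frame and saving the image only when it returns True.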

I didn’t manage to create a game for my cat yet, but I am happy to be using AI for face detection. The possibilities abound.